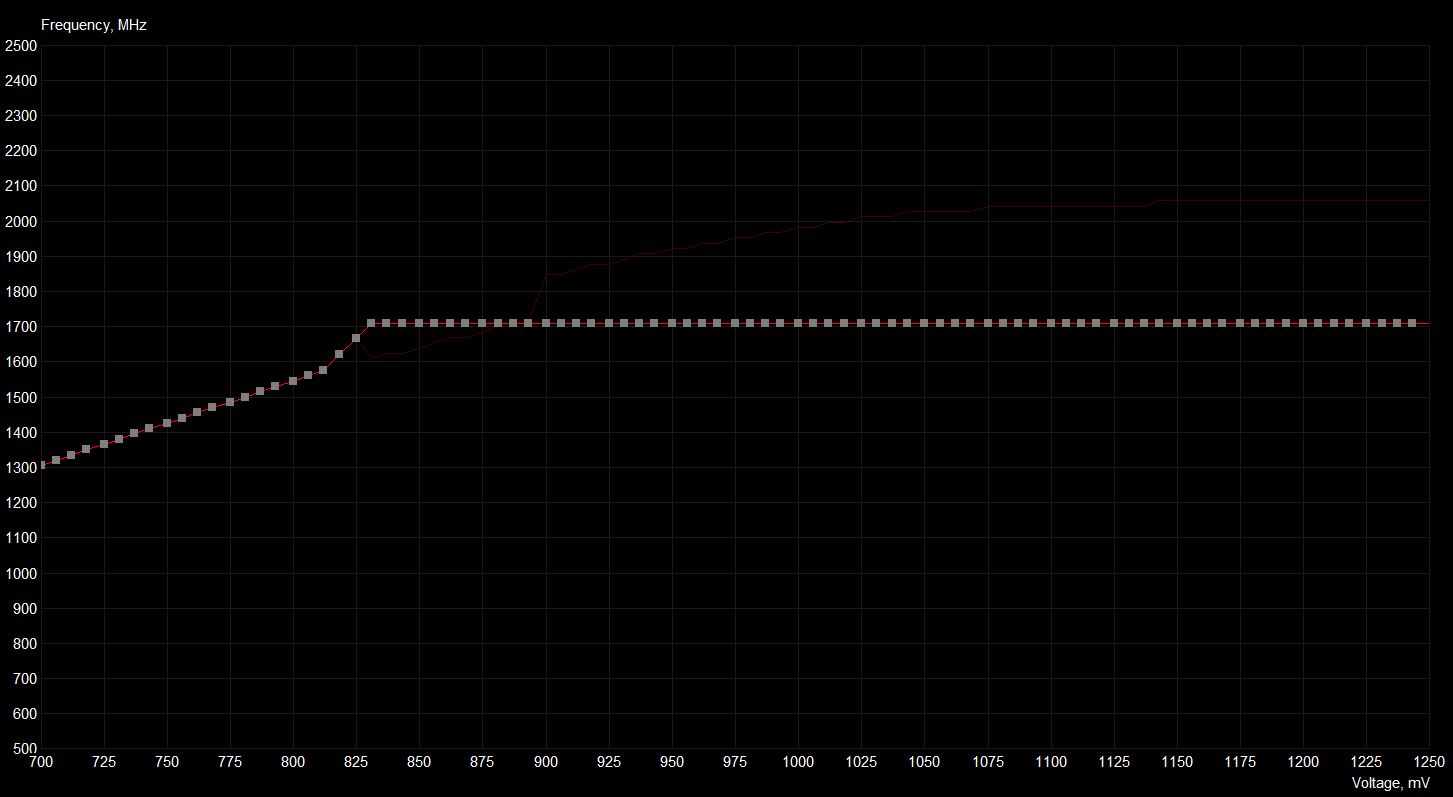

I lowered further the voltage at which the GPU reaches its maximum frequency: 887 mV => 1905 MHz (start of the line).

Here are the results:

GPU max voltage: 0.894 V

GPU max temp: 73°C (still the same but again... short run and fans are indeed very efficient !)

GPU max freq: 1905 MHz

FPS average: 63.7 (+- 8.5)

So you are saying that I should set the line much lower? Like starting the line at 887 mV => 1800 MHz?

Or should I continue to find the lowest voltage possible for 1905 MHz ?

Thanks for being here.

Edit: this time I reduced the maximum frequency. I don't see any change in perf (according to those FPS numbers) since I started changing the freq(volt) curve...

GPU max voltage: 0.906 V

GPU max temp: 73°C

GPU max freq: 1755MHz

FPS average: 62.0 (+-7.6)

Another try, overclocking at low voltage but maximum frequency was reduced to 1710 MHz. Curve:

GPU max voltage: 0.844 V

GPU max temp: 70°C (gained 3°C yeah !)

GPU max freq: 1710 MHz

FPS average: 62.0 (+-8.0)
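To put the three runs side by side, here is a minimal Python sketch. The numbers are copied from the results above; the helper name `compare_to_baseline` is just for illustration, and I am assuming the "+-" value is the spread of the FPS samples as reported by the benchmark tool.

```python
# Runs copied from the results above:
# (max freq in MHz, max voltage in V, max temp in °C, FPS average, FPS spread)
runs = [
    (1905, 0.894, 73, 63.7, 8.5),
    (1755, 0.906, 73, 62.0, 7.6),
    (1710, 0.844, 70, 62.0, 8.0),
]

def compare_to_baseline(runs):
    """Compare every run to the first one (hypothetical helper).

    Returns (freq, freq change in %, FPS change in %, within_spread) per run.
    within_spread is True when the FPS difference is smaller than the
    smaller of the two reported spreads (assumption: "+-" is a spread).
    """
    base_freq, _, _, base_fps, base_spread = runs[0]
    rows = []
    for freq, _volt, _temp, fps, spread in runs:
        freq_delta_pct = 100.0 * (freq - base_freq) / base_freq
        fps_delta_pct = 100.0 * (fps - base_fps) / base_fps
        within_spread = abs(fps - base_fps) <= min(spread, base_spread)
        rows.append((freq, round(freq_delta_pct, 1), round(fps_delta_pct, 1), within_spread))
    return rows

rows = compare_to_baseline(runs)
for row in rows:
    print(row)
```

If I read it right, a 10% lower max frequency only costs about 3% FPS here, and that difference is well inside the run-to-run spread, which matches what I see in the raw numbers.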

Let's see if this gets stable when I am playing long sessions.

Finally, using a curve similar to the one you proposed in another topic (1620 MHz @ 844 mV), I got these Firestrike results (I posted them in the other topic as well).

Why is my Graphics score so low compared to yours (~20000)? I saved 3°C but don't reach your nice results. (Of course the temperature depends on the room temp; you can tell me if you did it outdoors in December haha)

Question: if I get similar performance at the same voltage for 1700 or 1900 MHz, which frequency should I choose? Or does it not matter?

| Chassis and Spec | Firestrike 3DMark | Graphics | Physics | Avg. GPU Temps | URL |
|---|---|---|---|---|---|
| * Nova 15" / R5 3600 / RTX 2070 @ 1620 MHz at 844 mV | 17092 | 18909 | 18648 | 63°C | Link |
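To put a number on the gap in Graphics score, here is a rough sketch. It assumes your ~20000 is a Firestrike Graphics score comparable to my 18909; since ~20000 is only approximate, the result is a ballpark figure. The function name `relative_gap_pct` is hypothetical.

```python
def relative_gap_pct(mine, reference):
    """Return how far `mine` is below `reference`, in percent of the reference."""
    return 100.0 * (reference - mine) / reference

# 18909 is my Graphics score from the table; 20000 is the approximate
# reference score (assumption: same benchmark and same sub-score).
gap = relative_gap_pct(18909, 20000)
print(f"Graphics score gap: {gap:.2f}% below the reference")
```

So the difference is roughly 5%, which seems small enough that it could come from the lower clock (1620 MHz), though I can't tell for sure.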
